In those days spirits were brave, the stakes were high, men were real men, women were real women and small furry creatures from Alpha Centauri were real small furry creatures from Alpha Centauri. (Douglas Adams, The Hitchhiker’s Guide to the Galaxy)

Another first today: my blog joins the majority of tech blogs which somewhere contain a Douglas Adams quote. I included this quote because my axiom for today is

A SCSI disk array should be a real SCSI disk array. Ditto iSCSI.

(there’s a reason I never became an author)

What I mean by this is that it should behave like a normal block device: a big bucket into which I can pour my data. Of how it organises that data I could not give a flying turd, but for the fact that the underlying structure should be completely transparent to me. It could engrave the data on glass statues of Leonard Cohen for all I care, as long as it’ll give it back to me in thirty milliseconds.

SunOracle’s ZFS storage arrays, of which the 7110 is a discontinued member, sadly appear to flout this rule. I’m not saying that they’re unique in this, but it’s certainly something to be aware of when planning an installation using them. I should also say at this point that I’ve found the units to be generally good, with the best management interface I’ve ever seen on a storage product, so I’m in no way arbitrarily biased against them.

I first came across this problem when I provisioned a fully-loaded 7110 with an iSCSI LUN that occupied the entire array (this only amounted to about 800 GB of usable space as I was using RAID 10 for performance). I mounted the LUN on a 2008R2 box running SANsymphony-V (hereinafter known as ‘SSV’). As a result, SSV initialised the entire LUN, writing data across it and causing the 7110 to report the whole thing as in use. This is expected behaviour: SSV manages thin provisioning within the LUN, so the storage unit underneath can’t tell which bits are in use and which aren’t. If it behaves like a normal SCSI device, this shouldn’t matter.

Unfortunately, almost straight away I started seeing ‘bad block’ messages for this device in the Windows system log. It seemed to be working correctly, SSV wasn’t seeing any errors, and I could read and write data quickly enough… but there was no way I was going to put this arrangement into production when I was seeing messages like that! They seemed to be pretty random and unrelated to load; indeed, they sometimes appeared when the LUN was completely idle.

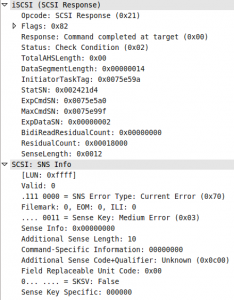

I wanted to see exactly what the array was reporting, as Windows isn’t particularly helpful in that respect. I ran a Wireshark trace on the SSV server (at a quiet time of day, as it’s quite CPU-intensive) to see exactly what was going on. After a bit of digging around I found a few examples of the 7110 reporting errors.

I’m no SCSI expert, but I do know that ‘check condition’ is not a good thing. Another field that caught my eye was the sense key, which said ‘media error’: no wonder the server was reporting bad blocks. I was pretty sure that these weren’t real media errors, as the 7110 wasn’t reporting any faults on its management interface. I did some digging and found T10, which is the committee responsible for defining the SCSI standards. They have a comprehensive list of additional sense codes (ASC/ASCQ pairs), so I looked up the code returned by the 7110 (0x0c00, that is, ASC 0x0c with ASCQ 0x00). Annoyingly this simply translates as ‘write error’, and what’s more it is not a valid code to return for a disk device!
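For anyone wanting to pull the same fields out of a capture by hand, here is a minimal Python sketch of how the sense key and ASC/ASCQ sit inside a SCSI sense buffer. The function name `decode_sense` and the sample bytes are my own illustration, not taken from the 7110 trace; the buffer is constructed to carry the values described above (sense key 0x3, which the standard names MEDIUM ERROR, and ASC/ASCQ 0x0c/0x00). It handles the two standard layouts, fixed format (response codes 0x70/0x71) and descriptor format (0x72/0x73), and nothing vendor-specific.

```python
# Sense key names as defined in the SCSI Primary Commands standard.
SENSE_KEYS = {
    0x0: "NO SENSE", 0x1: "RECOVERED ERROR", 0x2: "NOT READY",
    0x3: "MEDIUM ERROR", 0x4: "HARDWARE ERROR", 0x5: "ILLEGAL REQUEST",
    0x6: "UNIT ATTENTION", 0x7: "DATA PROTECT", 0x8: "BLANK CHECK",
    0x9: "VENDOR SPECIFIC", 0xA: "COPY ABORTED", 0xB: "ABORTED COMMAND",
    0xD: "VOLUME OVERFLOW", 0xE: "MISCOMPARE",
}


def decode_sense(sense: bytes) -> dict:
    """Extract sense key, ASC and ASCQ from a raw sense buffer."""
    if not sense:
        raise ValueError("empty sense buffer")
    response_code = sense[0] & 0x7F  # top bit is the VALID flag in fixed format
    if response_code in (0x70, 0x71):  # fixed format
        if len(sense) < 14:
            raise ValueError("fixed-format sense data too short")
        key = sense[2] & 0x0F
        asc, ascq = sense[12], sense[13]
    elif response_code in (0x72, 0x73):  # descriptor format
        if len(sense) < 4:
            raise ValueError("descriptor-format sense data too short")
        key = sense[1] & 0x0F
        asc, ascq = sense[2], sense[3]
    else:
        raise ValueError(f"unknown response code 0x{response_code:02x}")
    return {
        "sense_key": key,
        "sense_key_name": SENSE_KEYS.get(key, "RESERVED"),
        "asc": asc,
        "ascq": ascq,
        "asc_ascq": f"0x{asc:02x}{ascq:02x}",
    }


# Hypothetical fixed-format buffer (18 bytes), built by hand for illustration:
# response code 0x70, sense key 0x3, ASC 0x0c, ASCQ 0x00.
example = bytes(
    [0x70, 0x00, 0x03] + [0x00] * 4 + [0x0A] + [0x00] * 4 + [0x0C, 0x00] + [0x00] * 4
)
print(decode_sense(example))
```

The `asc_ascq` string is the value to look up in the T10 list, which also records the device types each code applies to.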

I logged a call with Oracle, and the official line was

It’s known issue [sic] that if the pool is beyond 80% utilization, ZFS will spend more time trying to allocate some free space for the new writes coming and this can lead [sic] media errors, timeouts, etc.

To be fair, I was already aware of release note RN022 which more-or-less says the same thing (although it doesn’t say I should expect media errors). However, I didn’t really expect this kind of behaviour unless I was using the thing to serve NFS or SMB. To return to my original sentiment, an iSCSI device should look and feel like a big fat disk. Why should I care what filesystem is underneath? Why even use a filesystem at all?

I reconfigured the array to leave 10% free (20% seemed like a bit much to ask on such a small array), and the problem vanished. Good performance on a load test, no more bad blocks, job’s a good ’un. But I’m not happy.

It seems that, if you want to use one of these arrays as a single iSCSI LUN, there is a significant amount of unusable space that must be kept free if you want decent performance. If you believe Oracle, you have to leave 20% of fallow space, effectively short stroking the array for the sake of error-free performance. If you don’t, you’ll get inexplicable—and, if I’m reading the T10 document correctly, protocol-violating—errors from the array, leaving you wondering what to do next.
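To put numbers on that, here is a rough Python sketch of the sizing arithmetic, assuming the roughly 800 GB of usable space mentioned earlier. The function names are made up for illustration and the 80% threshold is simply the figure from Oracle’s reply; none of this is an Oracle tool or API.

```python
def max_lun_gb(pool_gb: int, reserve_pct: int) -> float:
    """Largest LUN that still leaves reserve_pct of the pool unallocated."""
    if not 0 <= reserve_pct < 100:
        raise ValueError("reserve_pct must be between 0 and 99")
    return pool_gb * (100 - reserve_pct) / 100


def over_threshold(used_gb: float, pool_gb: float, threshold_pct: int = 80) -> bool:
    """True if pool utilisation is strictly above threshold_pct."""
    return used_gb * 100 > pool_gb * threshold_pct


POOL_GB = 800  # approximate usable space on the RAID 10 pool described above

for reserve in (0, 10, 20):
    lun = max_lun_gb(POOL_GB, reserve)
    flag = over_threshold(lun, POOL_GB)
    print(f"reserve {reserve:>2}%: LUN {lun:.0f} GB, above 80% utilisation: {flag}")
```

On this arithmetic a 10% reserve gives a 720 GB LUN, which is still above the 80% line once the LUN has been fully written, while the full 20% costs 160 GB of an 800 GB pool.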

Without getting all Freudian: why can’t an array just be an array?
